At Lynchpin, we’ve spent considerable time testing and deploying Luigi in production to orchestrate some of the data pipelines we build and manage for clients. Luigi’s flexibility and transparency make it well suited to a range of complex business requirements and to seamlessly support more collaborative ways of working.

This blog post draws on our hands-on experience with the tool, which we have stress-tested to understand how it performs day to day in real-world contexts. We’ll walk through what Luigi is, why we like it, and where it could be improved, then share some practical tips for those considering using it to enhance their data orchestration processes and data pipeline capabilities.

What is Luigi and where does it sit in the data pipelines market?

Luigi is an open-source tool developed by Spotify that helps automate and orchestrate data pipelines. It allows users to define dependencies and build tasks with custom logic using Python, offering flexibility and a fairly low barrier to entry given how much it can do.

Although Spotify has since adopted a newer orchestration tool, Flyte, Luigi is still used by many major brands and continues to receive updates, which has allowed it to mature into a reliable choice for a range of data orchestration use cases.

Luigi sits amongst many popular tools used for data orchestration in the data engineering space – some of which are paid, while others are similarly open source.

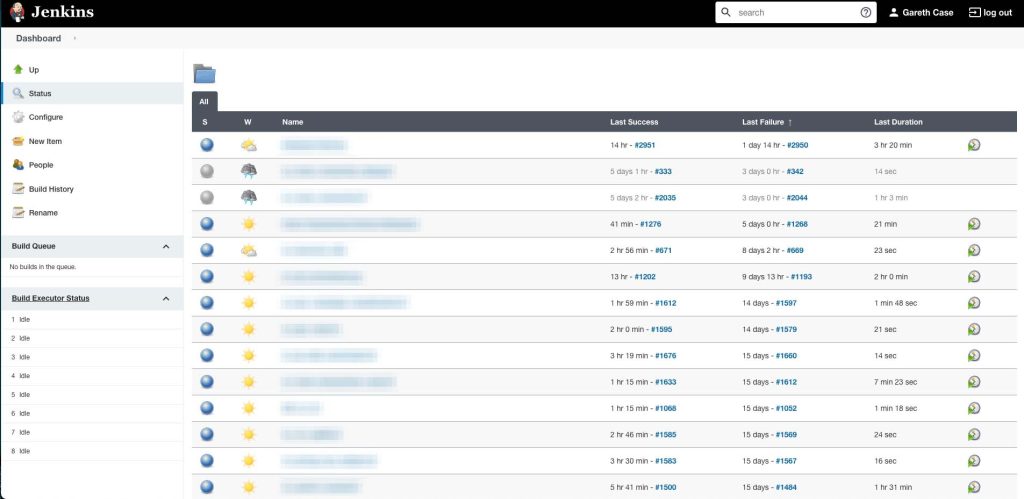

Another tool we’ve used for data orchestration is Jenkins. Although it isn’t designed for more heavy-duty pipelines, we’ve found it to work very well as a lightweight orchestrator, managing tasks and dependencies.

In the following section, we’ll break down some benefits of using Luigi for your data pipelines and a few reasons why you may choose it over a comparable tool such as Jenkins.

What we like ✅

Transparent version control:

One of the key advantages of Luigi is that pipelines are written in Python. This gives you transparent version control over your data pipelines – every change is committed and traceable: you know exactly what was changed, you can inspect it, and you can see who made the change and when. This becomes even more powerful when linked to a CI/CD pipeline, which we do for some of our clients, as it means that whatever is in the repository automatically becomes the source of truth for the pipeline.

With Jenkins, for example, changes can be made and it’s not necessarily obvious what was changed or by which team member (unless explicitly communicated) – which becomes increasingly important when you’re managing more complex data pipelines with many moving parts and dependencies.

Dependency handling and custom logic capabilities:

Managing data pipeline dependencies is where Luigi truly stands out. In a tool like Jenkins, downstream tasks can be orchestrated, but this often requires careful scheduling or wrapper jobs, which can become complicated and quite manual depending on the complexity of your needs. Luigi simplifies this by letting you define all dependencies directly in Python, which makes it straightforward to automate logic such as: ‘Run a particular job only after a pipeline completes, and only do this on a Sunday or if it failed the previous Sunday.’

This level of custom logic is trivial in Python but can be difficult to replicate in Jenkins, where perhaps the only option is to run on a Sunday without any conditions surrounding it.
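As a rough sketch of how the ‘Sunday, or catch up after a failed Sunday’ rule could be written in plain Python, consider the function below. The names `previous_sunday` and `should_run_weekly_job` are hypothetical, and `last_success` is assumed to come from wherever you record successful runs; none of this is part of Luigi itself.

```python
from datetime import date, timedelta


def previous_sunday(today):
    """Return the most recent Sunday strictly before `today`."""
    # date.weekday() uses Monday=0 ... Sunday=6.
    days_back = (today.weekday() + 1) % 7 or 7
    return today - timedelta(days=days_back)


def should_run_weekly_job(today, pipeline_complete, last_success):
    """Decide whether the weekly job should run today.

    pipeline_complete: True once the upstream pipeline has finished.
    last_success: date of the weekly job's last successful run, or None.
    """
    if not pipeline_complete:
        return False
    if today.weekday() == 6:  # it is Sunday
        return True
    # Not a Sunday: run only to catch up on a Sunday run that never succeeded.
    return last_success is None or last_success < previous_sunday(today)
```

A check like this could sit inside a task’s `requires()` or `run()` method, so the condition lives in the same version-controlled code as the rest of the pipeline.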

Pipeline failure handling:

Luigi treats all tasks as idempotent. A task counts as ‘done’ once its output exists, and it won’t be re-run unless you manually remove that output. This is particularly useful if you have big, complex pipelines and only need to re-run the jobs that failed. You don’t need to re-run everything: find the failed task, delete its output file if one was written, and re-execute the job, and only the incomplete tasks will run.

Backfilling at the point of a task:

Luigi handles backfilling easily by allowing users to pass parameters directly into tasks.

This allows you to retrieve historical data (for example, backfilling from the beginning of last year to present) without having to change the script or config files.

Luigi treats each distinct set of parameter values as a separate task, so even if the job has already run for one date, running it with a different date is seen as new work with its own output, and the parameters are simply passed through to the task.

Efficiency to set up, host, and use alongside existing infrastructure:

While tools such as Apache Airflow may require a Kubernetes cluster (and more) to begin running, Luigi is far simpler to host. You can run it on a basic VM (virtual machine) or on a cloud platform such as Google Cloud Platform, using a Cloud Run job. This makes it a great choice for smaller data pipelines or client-specific pipelines where you may want to decouple from the main infrastructure.

Market maturity and active use and development by many large brands:

Luigi has a large user base, which over the years has included a host of major brands such as Squarespace, Skyscanner, Glossier, SeatGeek, Stripe, Hotels.com, and more. This is integral to its maintenance and viability as a good open-source tool. Its core functionality rarely changes, making it a stable and reliable choice for users. In our experience, updates have focused primarily on maintaining security rather than on big overhauls of its functionality, which brings us to a few of its shortfalls…

What we don’t like ❎

Limited frontend and UI:

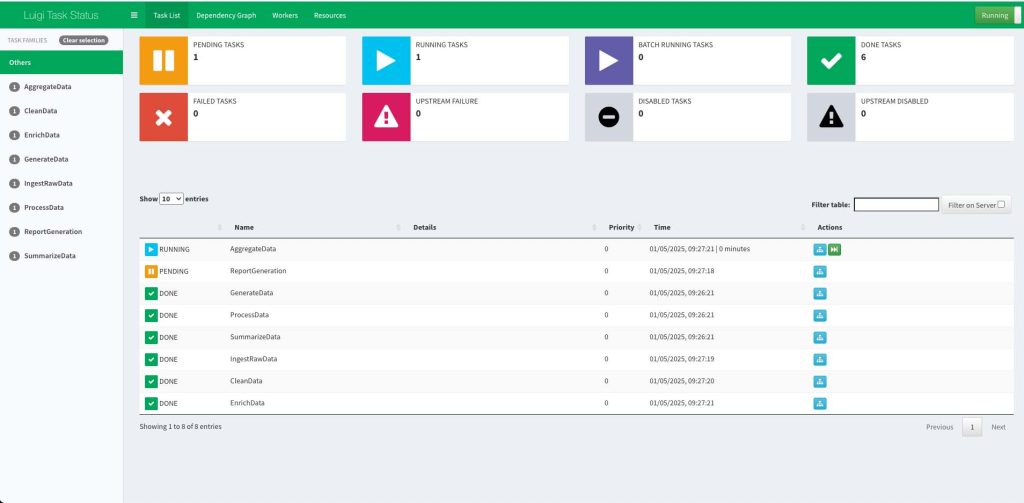

Luigi’s frontend leaves a lot to be desired. Firstly, it only really shows you jobs that are running or have recently succeeded in running, so if you have many running jobs in one day, the History tab fails to give you a strong overview of information.

When something fails, you’ll be notified, and you can inspect logs in a location that you specify in advance. However, it would be nice if the frontend provided a good summary of this information instead.

Workarounds do exist, such as saving your task history (e.g., tasks that ran, the status, how long they took, etc) in a separate table (for example, Postgres) where it can be visualised in an external run dashboard – providing a more personalised frontend for better monitoring, visibility into run times, failure rates, and so on.
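A rough sketch of what such a task history table could look like is shown below. It uses Python’s built-in `sqlite3` module as a stand-in for Postgres so that it runs anywhere; the table name `task_runs`, its columns, and the helper functions are all assumptions made for illustration rather than anything Luigi provides.

```python
import sqlite3


def open_history(path=":memory:"):
    """Open (and if needed create) a hypothetical task history table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS task_runs (
            task_name        TEXT NOT NULL,
            status           TEXT NOT NULL,  -- e.g. 'DONE' or 'FAILED'
            started_at       TEXT NOT NULL,  -- ISO 8601 timestamp
            duration_seconds REAL NOT NULL
        )
        """
    )
    return conn


def record_run(conn, task_name, status, started_at, duration_seconds):
    """Store one task run."""
    conn.execute(
        "INSERT INTO task_runs VALUES (?, ?, ?, ?)",
        (task_name, status, started_at, duration_seconds),
    )
    conn.commit()


def summarise(conn):
    """Return run count, failure rate and average duration per task."""
    rows = conn.execute(
        """
        SELECT task_name, COUNT(*), SUM(status = 'FAILED'), AVG(duration_seconds)
        FROM task_runs
        GROUP BY task_name
        ORDER BY task_name
        """
    ).fetchall()
    return [
        {"task": name, "runs": runs, "failure_rate": failed / runs, "avg_seconds": avg}
        for name, runs, failed, avg in rows
    ]
```

A dashboard could then query this table directly; `record_run` would need to be called from your own task code or from whichever success and failure hooks you use.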

Setting something like this up would provide more feature parity with a tool such as Jenkins, which, by contrast, does a great job at providing stats and visual indicators for task history, job health, what’s running, and more – right out of the box.

Documentation could be improved:

While Luigi provides all the key documentation you need, it’s not always the easiest to find or navigate – this, when compared to tools such as dbt, makes documentation as a whole feel sparse in places, especially when dealing with more advanced features or plugins.

For instance, helpful features such as enabling dependency diagrams or tracking task history involve installing separate modules, a process that isn’t particularly well explained in the official documentation.

In many instances, users may find themselves gaining the most clarity about how the tool works by trying things out and learning as they go.

Python path issues – everything must be clear or else Luigi will struggle to find it:

To avoid a barrage of ‘module not found’ errors, Luigi will need to know exactly where everything lives in your environment.

A workaround we found useful is creating a shell script that sets all the necessary paths and anything else Luigi may need to run successfully.

While something like this may take a little time to set up, it’s a small level of upfront effort to improve your workflow in Luigi and avoid any issues in the longer run.
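The workaround described above is a shell script; purely for illustration, the sketch below expresses the same idea as a small Python launcher instead. The project layout (a `src` directory), the function names, and the example module and task names are all hypothetical. The `python -m luigi --module ...` invocation is Luigi’s standard command-line entry point.

```python
import os
import subprocess
import sys
from pathlib import Path


def build_env(project_root, extra_dirs=(), base_env=None):
    """Return a copy of the environment with PYTHONPATH set explicitly."""
    env = dict(os.environ if base_env is None else base_env)
    root = Path(project_root)
    paths = [str(root.resolve())]
    paths += [str((root / d).resolve()) for d in extra_dirs]
    if env.get("PYTHONPATH"):
        paths.append(env["PYTHONPATH"])  # keep whatever was already set
    env["PYTHONPATH"] = os.pathsep.join(paths)
    return env


def luigi_command(module, task, *args):
    """Build the command line for running one Luigi task."""
    return [sys.executable, "-m", "luigi", "--module", module, task, *args]


def run_task(project_root, module, task, *args):
    """Run a Luigi task from the project root with the paths set up."""
    return subprocess.run(
        luigi_command(module, task, *args),
        cwd=project_root,
        env=build_env(project_root, extra_dirs=["src"]),
        check=True,  # raise if Luigi exits with an error
    )
```

For example, `run_task("/path/to/project", "pipelines.sales", "LoadSales", "--local-scheduler")` would launch a hypothetical `LoadSales` task with the project root and its `src` directory on the Python path.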

Our top tips for getting started with data pipelines using Luigi:

- If one of your tasks fails, make sure you delete any output file it wrote before running it again. If Luigi finds an output file, it will assume the task completed successfully and skip it in the re-run.

- To make up for Luigi’s limited frontend, we think it’s worth your time to set up your own custom run dashboard. It lets you monitor tasks and gives you the tidy, complete overview that the built-in UI doesn’t provide.

- For a smooth and pain-free setup, we recommend using a shell script to handle Python paths and avoid any issues that may prevent Luigi from locating your files.

- Be prepared to dive in and get your hands dirty to really understand how things work in Luigi. Documentation is thin in places or sometimes hard to find when compared to other tools on the market, so you may find there is a bit of a learning curve or trial and error process to be aware of.

Conclusion:

We think Luigi is a powerful data orchestration tool for anyone who is comfortable with Python, has experience managing data pipelines, and is happy to get to grips with a few quirks that may make onboarding a bit challenging.

If you’re looking for an alternative to tools like Apache Airflow or Jenkins, Luigi is definitely worth trying out. While we recognise that its UI and documentation are lacking when compared to other tools in this space, we found that Luigi’s version controlling, dependency handling, and logic capabilities make it a handy tool for a range of our clients’ use cases.

For more information on how we can support your organisation with data pipelines and data orchestration – including custom builds, pipeline management, debugging and testing, and optimisation services – please feel free to contact us or explore the links below.

About the author

Gareth Case

Gareth joined Lynchpin in 2021, coming from a background in Financial Services.

Gareth has experience in building out data warehousing and ETL solutions, for both on-premises and cloud platforms, having worked with Microsoft Azure and Google’s Cloud Platform.

Gareth also has experience in digital measurement including Adobe Analytics and Google Analytics, along with implementation via tag management systems.

Gareth holds a PhD in Mathematics and loves to automate everything he can!