Great: analytics has a new pointless buzzword.

Last week Lynchpin – well Andrew Hood to be accurate – spoke at JUMP 2012 in London. The topic was “Big Data – Does Size matter?”. Here is a synopsis of what he said (Full presentation PDF below).

If web analytics has always been “big” data are we getting diminishing returns on our accumulation of digital data? To look beyond the spin we need to consider some practical considerations vital to getting business value from this ever increasing “wealth of data”

- We want to measure every customer interaction, so why are we still so session focused in our analysis?

- What does “attribution” really mean?

- Do big questions need big data?

Maybe we should start with defining “Big Data”. This is the best we’ve seen.

“Big data is any data that: doesn’t fit well into tables and that generally responds poorly to manipulation by SQL.”

Mark Whitehorn – Chair of Analytics at the University of Dundee.

This definition is balanced by the results of survey of IT professionals who clearly don’t have a shared idea of what Big Data is and don’t believe it will replace our old RDBMS (Relational Databases).

If we accept Mark’s definition then we begin to see that web data is not actually Big Data.

To be Big Data it needs to fulfil 3 criteria these are:

- Volume – Large quantities of data in the terabyte if not the petabyte range

- Velocity – Streaming not batching

- Variety – Unstructured with no relationship

If we accept these criteria then web data does not comply. It can have volume (large sites) and it does have velocity. However, it is clearly structured and hence does not comply!

So, if Big Data is not the answer to getting more value out of our online data then what is?

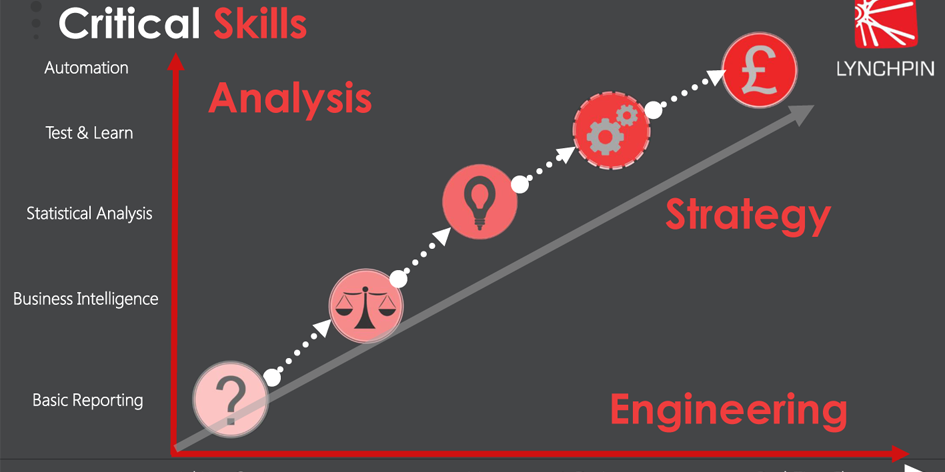

We need to consider and do the following

- Move beyond session-based models/metrics

- Extend our view of “attribution”

- Use relational databases properly

- Apply some good old statistics

And how do we do this?

First, we need to move away from the traditional web analytics tools. As these by there very nature destroy the relationship layers because they are session based.

Secondly, we need to broaden our attribution modelling to include offline customer data.

Thirdly, we need to start using tools to allow us the freedom to deliver all the above. And, guess what? The old trusty Relational Database does exactly that. They are free and powerful.

Finally, we need to turn to statistics. “Big Data” stores do not have magical built-in analytical capabilities (exception: some standardised algorithms for things like fraud detection are emerging). Making sense of data big and small is going to need some established statistical techniques:

- Propensity modelling

- Association/correlation analysis

- Identifying statistically significant changes/trends

So we now are reaching convergence. One common complaint in digital is the struggle to recruit decent “web analysts”. By contrast, there is an established industry of data analysts with skills in: Relational databases and Statistical modelling. If less of our web data was locked up in proprietary data models, those skills suddenly become exceptionally valuable.

So in conclusion:

- Take a reality check on “big”

- CPU and storage capabilities growing much faster than data points in clickstream

- Most web data is highly structured and relational (the opposite of “big data”)

- Established systems and skills are going to be key to unlocking more value in the short-medium term

- Relational databases and BI slice-and-dice tools

- Statistical modelling techniques

Download the full presentation here Lynchpin JUMP 2012

About the author

Andrew Hood

As CEO, Andrew leads the talented team at Lynchpin to deliver great work for our clients across data strategy, science and engineering.

With a Masters in Information Systems Engineering, Andrew scaled technical systems behind the first internet hotel reservation systems during the dotcom boom before shifting into developing web analytics and marketing tracking solutions and subsequently founding Lynchpin in 2005.

As well as leading the team at Lynchpin, Andrew acts as Econsultancy's lead consultant, trainer and author on the topic of analytics, lectures on the MSc in Digital Marketing at Manchester Metropolitan University and is a Non-Executive Director at enterprise crypto firm Zumo.